This page offers guidance for running Ansys Electronics Desktop from the command line (without the GUI) using the LSF job scheduler on our CAD Compute Cluster.

More information about the Load Sharing Facility (LSF) job scheduler is provided here.

Our Cluster runs the Linux operating system. We provide some basic information about Linux and Linux commands here and here.

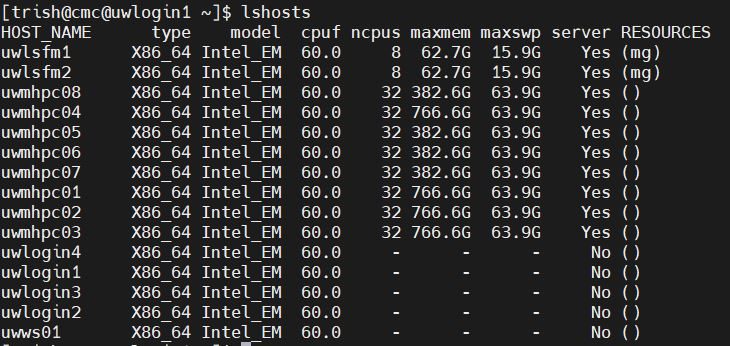

To display the nodes of CMC’s CAD Compute Cluster, type lshosts at a command prompt, as shown in Figure 1. The list includes four login nodes (uwlogin*), two management nodes (uwlsfm*; 8 cores and 63 GB of RAM each), and eight compute nodes (uwmhpc*; 32 cores and hundreds of GB of RAM each).

Figure 1: The Nodes in the CAD Compute Cluster
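To illustrate how lshosts output can be read, the sketch below counts the listed hosts by name prefix (uwlogin, uwlsfm, uwmhpc). The function name count_nodes_by_prefix is hypothetical. The sketch assumes the default lshosts layout: one header line, then one host per line with the host name in the first column.

```shell
# Hypothetical helper: count the hosts in `lshosts` output by name prefix.
# Assumes the default lshosts layout: one header line, then one host per
# line with the host name in the first column.
count_nodes_by_prefix() {
    awk 'NR > 1 && NF > 0 {
             prefix = $1
             sub(/[0-9].*$/, "", prefix)   # uwmhpc01 -> uwmhpc
             count[prefix]++
         }
         END { for (p in count) print p, count[p] }' | sort
}

# Usage on the Cluster:
#   lshosts | count_nodes_by_prefix
```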

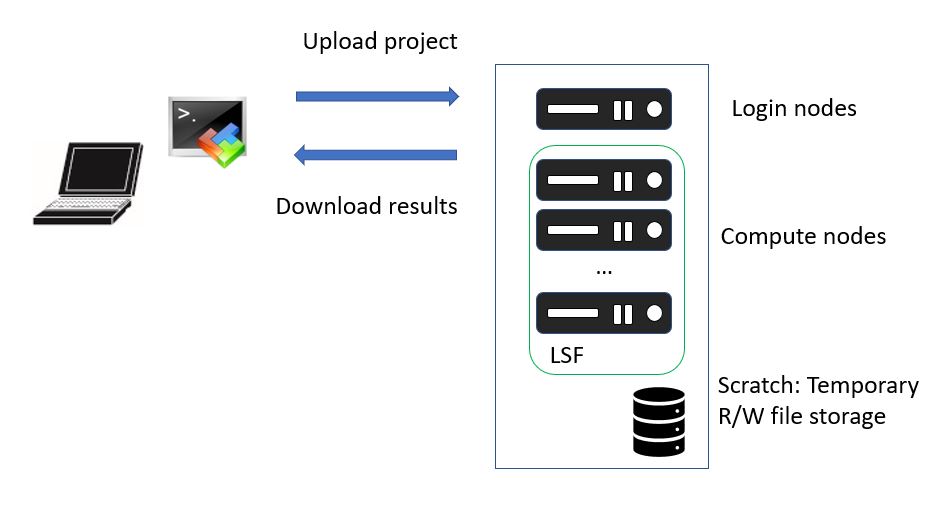

When you run a simulation on the Cluster, you submit your simulation request to an LSF queue. This step is the same for all CAD software installed on CMC’s Cluster, including Ansys, COMSOL and Cadence. The job scheduler tries to assign the hardware (CPU cores and RAM) that you request in your .sh shell script to your simulation run, up to a limit of 32 cores. CMC IT staff set this limit to prevent abuse of Cluster resources.

Figure 2: A Block Diagram of Cluster Operation

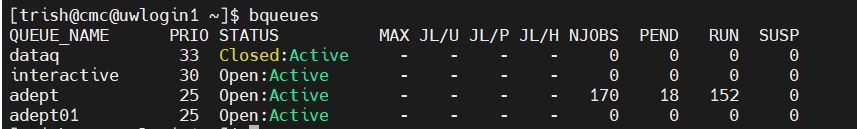

Use the bqueues command to list the available queues. The ‘b’ prefix stands for batch. These queues run simulations in batch mode, and LSF’s batch commands (bsub, bjobs, bhosts, bqueues and others) all begin with ‘b’.

Figure 3: The Queues in the CAD Compute Cluster
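If you only need the queue names, for example to choose a value for #BSUB -q, a short filter like the one below can trim the bqueues listing. This is a sketch. It assumes the default bqueues layout, with a QUEUE_NAME header line and the queue name in the first column, and queue_names is a hypothetical helper name. To see the full settings of a single queue, run bqueues -l followed by the queue name.

```shell
# Hypothetical helper: print just the queue names from `bqueues` output.
# Assumes the default layout: a QUEUE_NAME header line, then one queue
# per line with its name in the first column.
queue_names() {
    awk 'NR > 1 && NF > 0 { print $1 }'
}

# Usage on the Cluster:
#   bqueues | queue_names
#   bqueues -l adept      # detailed settings for one queue
```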

Summary: You can run Ansys Electronics Desktop (EDT) without the GUI. To do so, write a script that contains all the commands for Ansys, then submit that script to the LSF job scheduler.

The following steps, tested on the Cluster, show how this is done.

For these instructions, a prepared project supplied by Ansys support staff was used (a bandpass filter, with filename bp_filter.aedt). You will find it in the list of examples included with your local Ansys EDT installation.

- Log into the Cluster using one of the VCAD Cloud instances called CAD Compute Cluster.

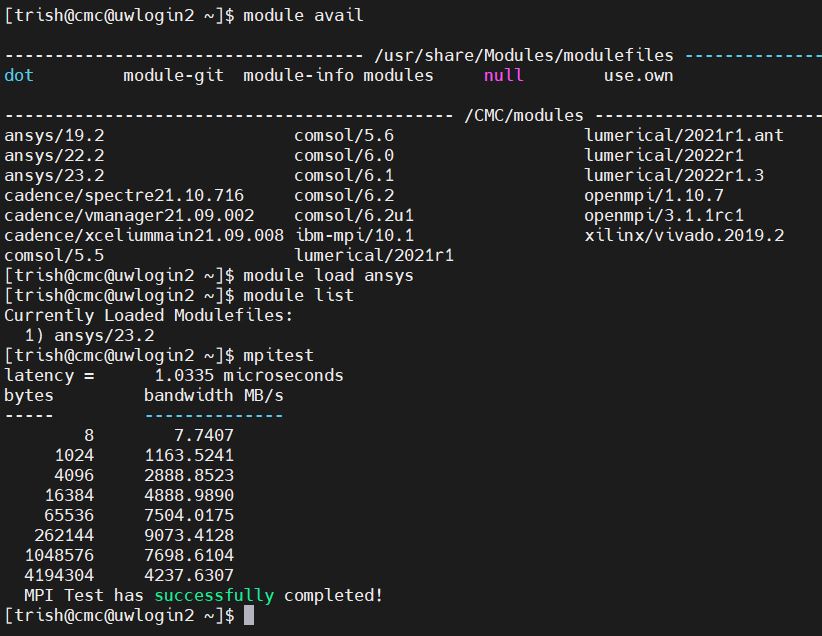

- Enter module load ansys in your terminal window. This will link the default version of Ansys to the LSF job scheduler. You can choose another version if you prefer.

- Type module list to confirm that the software has been linked to LSF.

- Use the mpitest command to verify Message Passing Interface (MPI) software function. If you want to know more about MPI’s role in simulation, your Ansys Help files have this information.

Figure 4: Summary of Set-up Commands to Run Ansys

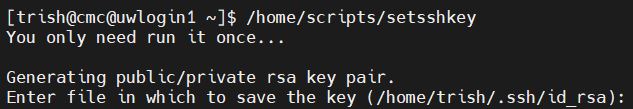

5. Use CMC’s setsshkey utility, located under /home/scripts, to generate an SSH key. You only have to do this once, when you first set up your workspace on the Cluster. For simplicity, do not enter a passphrase, and leave the key in the default location, which is usually under your home directory on the Cluster. A key without a passphrase lets the nodes running your simulation connect to one another without prompting.

Figure 5: Generate an SSH Passkey for Each Node Using the Script

6. Create a shell script that contains the LSF batch submission (bsub) options and the Ansys batch command. See Appendix A below for an example (“my_ansys_hfss.sh”). You must enable Ansys HPC pool licenses in a registry.txt file, which the script passes to Ansys through the -batchoptions option.

7. Upload your project file(s) to your working directory. We provide instructions for using MobaXterm to perform a secure file transfer (sftp) upload on the Cluster pages.

8. Upload your shell file (e.g. “my_ansys_hfss.sh”) to the same directory.

Make sure your shell file contains no Windows line endings. Windows editors end each line with a carriage return (CR) as well as a line feed, and the stray carriage returns can cause the script to fail on Linux.

9. At a prompt, run bsub < my_ansys_hfss.sh to submit the simulation. The < redirect passes the script to bsub on standard input, so LSF reads the #BSUB options it contains.

10. When your simulations have completed, download your results to your local computer for review.
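Several of the checks in the steps above can also be scripted. The sketch below shows one possible approach and is not a CMC-provided tool. It makes three assumptions:

- setsshkey writes a standard OpenSSH key pair (id_rsa or id_ed25519) to ~/.ssh.
- module list prints its output to stderr, as many module systems do.
- bsub confirms a submission with its usual "Job <ID> is submitted to queue <name>." line.

All function names (module_is_loaded, ssh_key_ready, strip_cr, job_id_from_bsub) are hypothetical.

```shell
# Hypothetical helpers for the steps above. Only module, setsshkey and bsub
# come from this guide; every function name below is illustrative.

# Succeeds if the text on stdin (for example `module list 2>&1`)
# mentions the module named in $1.
module_is_loaded() {
    grep -qi -- "$1"
}

# Succeeds if a public key in directory $1 (default ~/.ssh) is also listed
# in authorized_keys there. Assumes setsshkey uses the standard OpenSSH
# file names id_rsa.pub or id_ed25519.pub.
ssh_key_ready() {
    dir=${1:-"$HOME/.ssh"}
    for pub in "$dir/id_rsa.pub" "$dir/id_ed25519.pub"; do
        if [ -s "$pub" ] && [ -f "$dir/authorized_keys" ] &&
            grep -qF -- "$(cat "$pub")" "$dir/authorized_keys"; then
            return 0
        fi
    done
    return 1
}

# Removes Windows carriage returns from the script named in $1, in place
# (the same effect as dos2unix, where that tool is installed).
strip_cr() {
    tr -d '\r' < "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Prints the job ID from the line bsub prints on success,
# for example "Job <12345> is submitted to queue <adept>."
job_id_from_bsub() {
    sed -n 's/^Job <\([0-9]*\)> is submitted.*/\1/p'
}

# Example session on a Cluster login node (not run here):
#   module load ansys
#   module list 2>&1 | module_is_loaded ansys || echo "Ansys module not loaded"
#   ssh_key_ready || echo "SSH key missing: run setsshkey from /home/scripts"
#   strip_cr my_ansys_hfss.sh
#   jobid=$(bsub < my_ansys_hfss.sh | job_id_from_bsub)
#   echo "Submitted job $jobid"
```

Capturing the job ID at submission time makes it easy to pass to bjobs or bkill later.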

During simulation runs, use commands such as bjobs -l and bhosts to monitor your simulation progress. For example:

~$ bjobs -l

… or

~$ bhosts

… where ~$ is a command prompt in a terminal window.
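To wait for a job without re-typing bjobs, you could poll its status in a loop. The sketch below assumes the default bjobs layout, in which STAT is the third column of the first job line. The function names job_status and wait_for_job, and the 60-second interval, are hypothetical choices. While a job is running, bpeek followed by the job ID shows the output the job has produced so far.

```shell
# Hypothetical helpers for watching a job; names are illustrative.

# Prints the STAT field (PEND, RUN, DONE, EXIT, ...) from default
# `bjobs <jobid>` output, where STAT is the third column of the first job line.
job_status() {
    awk 'NR == 2 { print $3; exit }'
}

# Polls job $1 every 60 seconds until it is no longer pending, running
# or suspended, then returns. Run it on a Cluster login node only.
wait_for_job() {
    while :; do
        stat=$(bjobs "$1" 2>/dev/null | job_status)
        echo "job $1: ${stat:-not found}"
        case $stat in
            PEND|RUN|PSUSP|USUSP|SSUSP) sleep 60 ;;
            *) return 0 ;;
        esac
    done
}

# Usage on a login node:
#   wait_for_job 12345
```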

Appendix A

This is an example script that uses Ansys 2023 R2 for batch simulations.

Lines that begin with #BSUB are read by LSF as job submission options. Any other line that begins with # is a comment: LSF ignores it, and so does the shell that runs the script. This example starts its comments with ## so that they stand out from the #BSUB lines, as in ## This is a comment.

If you use this example as a template, insert your assigned CMC Cluster user name in place of *user_name* below. This name is usually a 5-digit number.

```shell
#!/bin/bash
## Sets the Linux shell to Bash

## Commands to be read by LSF start with #BSUB

## Name of the job
#BSUB -J ansys_HFSS_example

## Queue, chosen from the bqueues list
#BSUB -q adept

## Number of cores (job slots), up to the 32-core maximum
#BSUB -n 24

## Output and error files written by LSF; %J is replaced by the job ID
## -o and -e append to existing files; -oo and -eo overwrite them
#BSUB -oo BPFilter_%J.out
#BSUB -eo BPFilter_%J.err

## Example Ansys command line call; keep numcores equal to the #BSUB -n value
/CMC/tools/ansys/ansys.2023r2/v232/Linux64/ansysedt \
    -distributed -machinelist numcores=24 \
    -auto NumDistributedVariations=1 \
    -monitor -ng \
    -batchoptions registry.txt \
    -batchsolve /home/user_name/bp_filter.aedt
```
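The example script sets the core count in two places (#BSUB -n 24 and numcores=24), so the two values can drift apart. One way to keep them in step is to read, at run time, the slot count that LSF actually allocated. This sketch assumes that LSF sets LSB_DJOB_NUMPROC in the job environment, which it normally does to report the slots allocated to the job. Otherwise the sketch falls back to 24. The function name ansys_numcores is hypothetical.

```shell
# Hypothetical helper: choose the Ansys core count from the slots LSF
# allocated (LSB_DJOB_NUMPROC, assumed to be set inside the job), falling
# back to 24 to match the example's #BSUB -n value.
ansys_numcores() {
    n=${LSB_DJOB_NUMPROC:-24}
    case $n in
        *[!0-9]*) echo "unexpected core count: $n" >&2; return 1 ;;
    esac
    if [ "$n" -gt 32 ]; then
        echo "core count $n exceeds the 32-core Cluster limit" >&2
        return 1
    fi
    echo "$n"
}

# Inside my_ansys_hfss.sh, after the #BSUB lines (other options unchanged):
#   NUMCORES=$(ansys_numcores) || exit 1
#   ... ansysedt -distributed -machinelist numcores="$NUMCORES" ...
```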

The file registry.txt, which the command above passes to ansysedt with -batchoptions, contains the entry ‘HPCLicenseType’=‘pool’. This entry tells Ansys to use the ‘pool’ type of the anshpc license feature. Use of a registry file is described in the Ansys help files.

Note: The file also sets a temporary directory (TempDirectory) under /scratch. During a solution, Ansys writes temporary files each time it finishes a set of calculations. That data becomes the starting point for the next round of calculations, and so on until a solution is reached. These temporary files are overwritten with fresh data each time and can be large and numerous, so the Cluster provides a scratch disk for this purpose.

Here is a copy of the example registry file contents.

```
$begin 'Config'
'HPCLicenseType'='pool'
'TempDirectory'='/scratch/user_name@cmc'
$end 'Config'
```

Add any other registry entries you need between the $begin and $end lines.
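If you prefer to create registry.txt directly on the Cluster rather than upload it, a heredoc can write the same two entries shown above. This is a sketch. write_registry is a hypothetical helper, and the /scratch/user_name@cmc layout is copied from the example, so it may differ for your account. The file uses straight single quotes throughout.

```shell
# Hypothetical helper: write registry.txt with the pool license entry and
# a scratch temp directory for user $1. Output file is $2 (default
# registry.txt). The scratch path layout is taken from the example above.
write_registry() {
    user=$1
    out=${2:-registry.txt}
    # \$ keeps $begin/$end literal; ${user} is expanded by the shell.
    cat > "$out" <<EOF
\$begin 'Config'
'HPCLicenseType'='pool'
'TempDirectory'='/scratch/${user}@cmc'
\$end 'Config'
EOF
}

# Usage, from the directory that holds my_ansys_hfss.sh:
#   write_registry 12345
```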